How Can Data and Automation Assist with Sustainability in Your Business?

The entire world is undergoing an inevitable digital transformation, which has not just changed the daily…

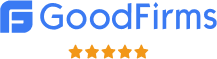

How Can Industrial Data Help Overcome All Business Challenges?

Today, if we look at the ongoing competition between industrial companies, we can easily point out the challenging…

What Is the Impact of IoT Data Analytics on Your Business?

Today, if we observe current trends and business processes, we can say that IoT solutions are…

How Is Data Science for IoT Changing Business Outlook?

The Internet of Things has been recognized as a transformative technology that has changed the shape…